Deepfakes are now everywhere. From face-swaps to impersonations of political leaders and celebrities, spotting the difference between real and fake can be nearly impossible. These digital impressions are already having real financial repercussions. A company in the U.S. was allegedly scammed out of 10 million USD using an audio deepfake of its CEO. Another company in the UK was scammed in a similar way out of more than 200 thousand euros. Now, with the 2020 U.S. elections right around the corner, the potential for weaponizing deepfakes on social media has become quite real. Tech giants such as Google, Facebook, Microsoft, and Twitter are already fighting back. Facebook has spent more than 10 million USD since last year to help detect and stop deepfakes.

What do deepfakes mean for businesses, what’s really at stake, and is anything being done to detect, regulate, and prevent them?

Deepfakes and Detection

How are deepfakes made?

There is more than one way to create a deepfake, but in principle the methods are similar. In a sentence, a deepfake is AI-generated footage (video or audio) of a real person saying or doing fictional things. First, you need a source video and a destination video. A computer then uses a deep neural network to learn the movements and sounds in the two recordings (your source and destination files). Finally, the AI combines the two realistically.
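To make the "learn two recordings, then combine them" idea more concrete, here is a minimal sketch of one architecture commonly used for face swaps: a single encoder shared by both people, plus one decoder per person. This is an assumption for illustration, not necessarily what any particular app does. The "frames" are hypothetical four-number vectors rather than images, and all names (`encoder`, `decoders`, `make_frames`, `swap`) are invented for this example.

```python
import random

random.seed(0)
DIM, LATENT, LR = 4, 2, 0.05   # toy sizes; real models work on image tensors

def matvec(matrix, vec):
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]

def rand_matrix(rows, cols):
    return [[random.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

# One encoder shared by both people, one decoder per person.
encoder = rand_matrix(LATENT, DIM)
decoders = {"source": rand_matrix(DIM, LATENT), "target": rand_matrix(DIM, LATENT)}

def train_step(frame, person):
    """One gradient step on half the squared reconstruction error."""
    dec = decoders[person]
    z = matvec(encoder, frame)
    err = [out - x for out, x in zip(matvec(dec, z), frame)]
    # Gradient with respect to the latent code, computed before dec changes.
    grad_z = [sum(dec[i][j] * err[i] for i in range(DIM)) for j in range(LATENT)]
    for i in range(DIM):
        for j in range(LATENT):
            dec[i][j] -= LR * err[i] * z[j]
    for j in range(LATENT):
        for k in range(DIM):
            encoder[j][k] -= LR * grad_z[j] * frame[k]
    return sum(e * e for e in err)

def make_frames(identity, count):
    """Hypothetical 'frames': a fixed identity part plus a varying pose part."""
    frames = []
    for _ in range(count):
        pose = random.uniform(-1.0, 1.0)
        frames.append([identity[0], identity[1], pose, -pose])
    return frames

data = {"source": make_frames((1.0, 0.0), 20), "target": make_frames((0.0, 1.0), 20)}

history = []   # average reconstruction error per epoch
for epoch in range(200):
    total = 0.0
    for person, frames in data.items():
        for frame in frames:
            total += train_step(frame, person)
    history.append(total / 40)

def swap(frame):
    """Encode a source frame, then decode it with the target's decoder."""
    return matvec(decoders["target"], matvec(encoder, frame))

fake = swap(data["source"][0])
print(f"loss: {history[0]:.3f} -> {history[-1]:.3f}; swapped frame: {fake}")
```

The swap at the end is the whole trick: because both people pass through the same encoder, a frame of the source can be decoded with the target's decoder. In this toy version the result is only a rough illustration; real systems use deep convolutional networks and far more data.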

The technology itself is nothing new. Hollywood has been using it for years, for example to cast deceased actors in critical roles, such as Paul Walker in Fast & Furious 7. Gaming companies even use it to let players control their favourite athletes. What is new is that the process has become easier and considerably cheaper.

Over the past two years, the deepfake industry has boomed with the creation of apps like FakeApp and Faceswap. Today you can simply go online and choose from hundreds of available apps. All you need to get started is at least 10 seconds of video and some audio recording.

Now that hearing and seeing do not equate to believing anymore, what is really at stake here?

What’s at stake?

The most prominent concern is that people can see such a video, believe that it’s true, and that the market can then collectively react to it.

The most recent attacks include the use of audio deepfakes to carry out financial scams. The security firm Symantec has reported several attempted scams at companies involving audio deepfakes of top-level executives. In one case, a company was scammed out of more than 10 million USD by a deepfake audio of the CEO requesting a transfer.

The technology is not very different from what you would find in Alexa or similar products. The deepfake CEO can answer whatever questions you ask, since the responses are generated in real time.

On the other side of the spectrum, deepfakes offer endless opportunities. The entertainment industry is already capitalizing on them. For example, an actor can license his “likeness”, and the studio can then produce various marketing materials at a very low cost. The studio simply uses the actor’s “likeness” without having to rely on the same level of heavy production. Actors’ voices are already being monetized for a range of applications.

Amazon’s Alexa did just that: last year, in September 2019, Amazon announced that Alexa would be able to speak in celebrity voices such as that of Samuel L. Jackson.

You can see even more examples in AI-generated influencers on platforms like Instagram, such as lilmiquela. Such deepfakes are funded by Silicon Valley money, and their videos bring in millions of followers. This means huge potential for generating revenue without having to pay talent to perform.

In China, a news agency has created an AI-generated news anchor that could deliver news 24/7.


Still, the potential for misuse is very high. One of the most insidious uses of deepfakes is what is referred to as “revenge porn”: AI-generated pornography that someone publishes to get back at a person they believe has wronged them. This often happens with celebrities as well. Videos that could ruin the reputation of somebody in the public eye are a major source of concern for social media platforms.

However, the real concern lies higher up, in politics, with the upcoming 2020 US elections. Researchers from major social media platforms expect that deepfakes will very likely be deployed during the 2020 elections. It is unclear whether the aim would be to cause instability or to push votes towards a specific candidate. Either way, you could end up with videos of candidates saying something completely outrageous, or with false information that inflames the market. There is also a real threat of faked speeches from world leaders and international organizations, which could have drastic economic, political, and social consequences.

How Are Social Media Giants Responding?

Social media platforms such as Facebook are a massive channel for sharing information. The rise of cheap and quick deepfakes creates a real concern for these platforms, and more often than not they end up in the headlines for doing either too much or too little.

Earlier this year, Nancy Pelosi, the Speaker of the US House, accused Facebook of being an accomplice in misleading the American people. Before that, last year in May 2019, she accused Facebook of spreading misinformation after it refused to take down a manipulated video of her. At the time, Facebook responded by updating its content review policy and keeping the video up.

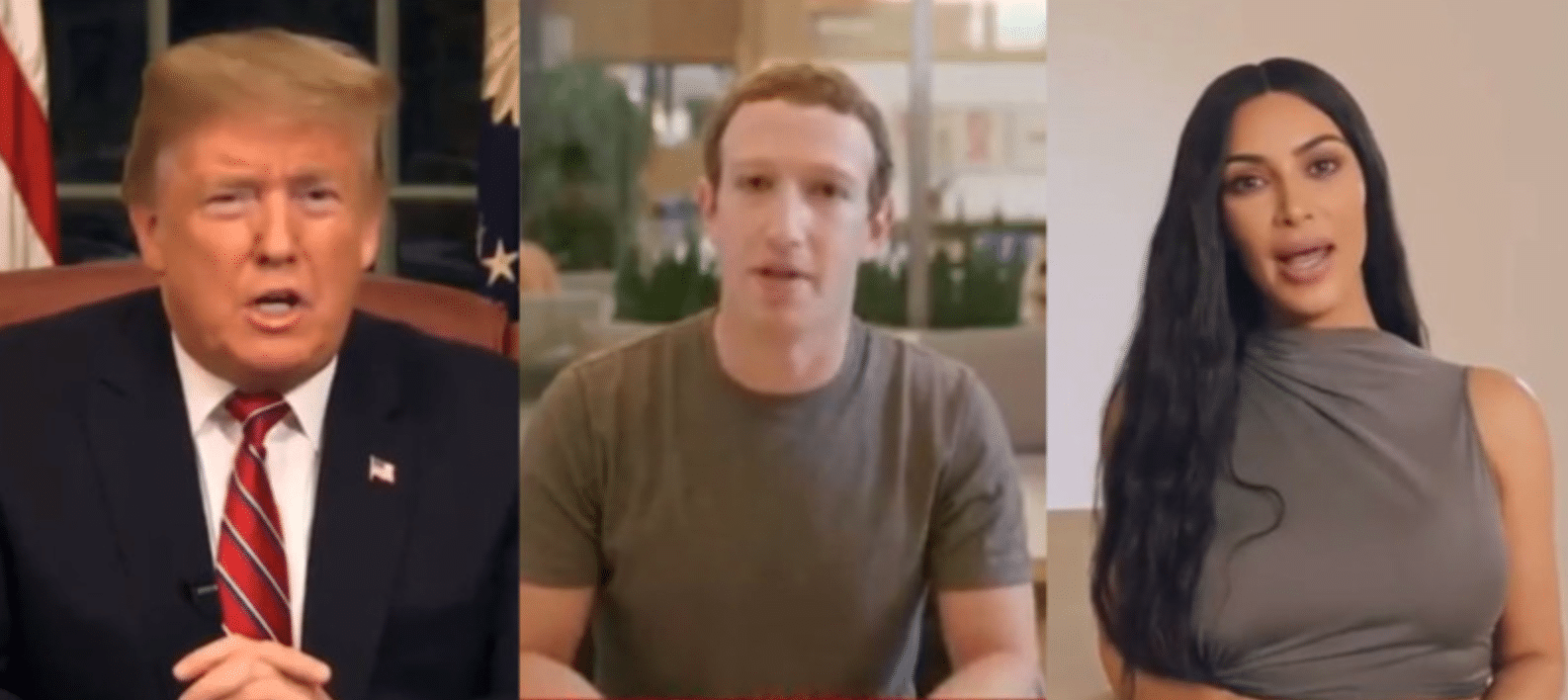

Since then, people have been testing Facebook’s decision by uploading deepfake videos of personalities such as Facebook’s very own Mark Zuckerberg, sitting US President Donald Trump, and celebrity Kim Kardashian. Facebook did not take down any of the videos.

Now there’s a question though, about whether misinformation, whether these deepfakes are actually just a completely different category of thing from normal kind of false statements overall. And I think that there’s a very good case that they are.

Mark Zuckerberg

However, Facebook is now trying to get ahead of deepfakes: it has invested more than 10 million USD and partnered with Microsoft to release a deepfake detection challenge on Kaggle. Facebook will create its own deepfake videos using actors for the challenge, and selected participants will compete with each other to create open-source detection tools.

This step has actually created a market incentive for detecting deepfakes, where previously the only available market was for creating them.

Twitter, on the other hand, as one of the fastest platforms for spreading both news and misinformation, claims that it is already cracking down on misinformation by enforcing its policies.

Twitter enables the clarification of falsehoods in real time. We proactively enforce policies and use technology to halt the spread of content propagated through manipulative tactics.

Nick Pickles, Twitter’s Director of Public Policy Strategy

Twitter has also recently acquired a London-based startup called Fabula AI. Fabula AI has a patented system called Geometric Deep Learning, which uses sophisticated algorithms to detect the spread of misinformation online. Its AI delivers an unbiased authenticity score for any piece of news in any language.

Google / YouTube

YouTube is owned by Google and is responsible for enforcing its own policies. YouTube’s community guidelines prohibit deceptive practices, and videos are continuously removed for violations. Google itself has released its own program to advance the detection of fake audio. This included launching a spoof challenge that allowed researchers to submit their countermeasures against fake speech.

Detection software

ZeroFox is a cybersecurity company that launched an open-source tool to help algorithms detect deepfakes. Its operation is based on gathering billions of content pieces (text, images, videos, etc.) every month. As the content flows through its system, it is routed to a deepfake detector that states whether the content is a deepfake or not. If it detects a deepfake, it can send out an alert. The company can thus monetize the service by alerting customers to deepfake content.
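The flow described above (content comes in, a detector scores it, an alert goes out) can be sketched in a few lines. This is only an illustration under stated assumptions and not ZeroFox's actual tool or API: `ContentItem`, `Alert`, `scan_stream`, the 0.8 threshold, and `dummy_detector` are all hypothetical names and values, and the detector is a placeholder for a real trained model.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List

@dataclass
class ContentItem:
    item_id: str
    kind: str        # e.g. "text", "image", "video"
    payload: bytes

@dataclass
class Alert:
    item_id: str
    score: float

def scan_stream(items: Iterable[ContentItem],
                detector: Callable[[ContentItem], float],
                threshold: float = 0.8) -> List[Alert]:
    """Route every item to the detector and collect an alert per likely deepfake."""
    alerts = []
    for item in items:
        score = detector(item)            # assumed to return a value in [0, 1]
        if score >= threshold:
            alerts.append(Alert(item.item_id, score))
    return alerts

def dummy_detector(item: ContentItem) -> float:
    """Placeholder only: a real detector would be a trained model."""
    return 0.95 if b"fake" in item.payload else 0.05

stream = [
    ContentItem("post-1", "video", b"real clip"),
    ContentItem("post-2", "video", b"fake clip"),
]
alerts = scan_stream(stream, dummy_detector)
print([a.item_id for a in alerts])  # ['post-2']
```

Passing the detector in as a function keeps the routing and alerting logic separate from the model, so the detector can be swapped out as detection methods improve.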

This is one example of a private approach to detecting deepfakes. Meanwhile, governments and academic institutions are working towards other solutions.

One of these solutions is coming out of the Pentagon, specifically from DARPA, the Defense Advanced Research Projects Agency. Its approach to combating deepfakes is to first create one and then find methods to detect it. Both sides, those detecting deepfakes and those creating them, rely heavily on AI; however, it seems that the creators have a good head start. So far, there is no single technology that can detect and stop the spread of deepfakes.

Regulation

However, the problem is more complex than just detecting deepfakes. Even once you have detected them, there is no real legal recourse to prevent or stop them. For the most part, it is legal to both research and create deepfakes.

If you’re a public figure, you pretty much don’t own the rights to your public appearances or to any videos taken of you in public without your consent. The law is very unclear in this area, but for the most part the same applies to regular people who post their videos on YouTube and Facebook.

However, if your image or video is used to create adult content, it is likely to be illegal in some countries and regions. For example, in California “revenge porn” is punishable by up to six months in jail and a 1,000 USD fine. Several porn websites have already declared that they will not allow deepfake-based porn to be hosted or uploaded.

Conclusion

For better or worse, deepfakes are here to stay. The challenge is whether the technology to detect and prevent them can keep up.

People who create deepfakes for nefarious reasons are way ahead in the game, either because they have access to more resources and technology or because they have a stronger incentive. This might be changing now, with all these tech giants stepping in and pushing for the creation of detection technologies.

This situation points to a deeper issue that is scarcely touched upon. Yes, deepfakes create a serious problem, but is detecting and preventing them a solution, or just a treatment for a symptom? Can we really rely on technology to solve technology-driven issues? Do we want to create an app for every problem we face? Should giant tech companies start reading up on moral philosophy? Or do we, as people, collectively need to read up on these deep existential questions?

Whatever the case may be, whenever a new technology arises there will always be people who manipulate and adapt it to pursue their own agenda, whether good or bad. The collective responsibility falls on everybody.
